Is It Time to Just Say No to Cross-Browser Testing?

Everyone is using the same browser anyway. According to StatCounter figures cited on Wikipedia (https://en.wikipedia.org/wiki/Usage_share_of_web_browsers), Chrome and Safari together hold 85% of the market and are the only browsers with more than a 5% share. That's right: all that work and expense to make sure your site works in IE is for 2% of users. Edge? Another 2%. The percentage of people who think the moon landing was faked (7%, according to https://www.livescience.com/51448-startling-facts-about-american-culture.html) is higher than the percentage of people using Firefox and Edge combined.

Suppose you're running all your tests against Chrome, Firefox, and IE. Two of those three browsers are Firefox and IE, so about two-thirds (roughly 67%) of your test execution expenses are going to support 6% of your users.
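The arithmetic behind that claim can be sketched in a few lines. This is a rough illustration, not a cost model: it assumes every browser run costs the same, and the 4% Firefox share is only inferred from the 6% combined figure above (IE is quoted at 2%). The function name `secondary_browser_cost` is made up for this example.

```python
def secondary_browser_cost(tested, primary, user_share):
    """Return (share of execution spend, share of users) for non-primary browsers.

    Assumption: each browser run costs the same, so spend is split evenly.
    """
    secondary = [b for b in tested if b != primary]
    spend_fraction = len(secondary) / len(tested)
    user_fraction = sum(user_share[b] for b in secondary)
    return spend_fraction, user_fraction


tested = ["Chrome", "Firefox", "IE"]
# IE share is quoted in the article; the Firefox share is inferred (assumption).
user_share = {"Firefox": 0.04, "IE": 0.02}

spend, users = secondary_browser_cost(tested, "Chrome", user_share)
print(f"{spend:.0%} of execution spend supports {users:.0%} of users")
```

Swap in the browser shares from your own analytics; the ratio matters more than the exact numbers.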

Browsers are becoming more and more alike under the hood. This has been happening for a while. Engines descended from KHTML (WebKit, and later its fork Blink) have powered Chrome, Safari, and Opera for years. But take a look at the latest Edge browser. It isn't just like Chromium under the hood. It actually is Chromium (https://www.theverge.com/2019/5/6/18527550/microsoft-chromium-edge-google-history-collaboration).

JavaScript frameworks are doing a better job of abstracting away the differences that still exist. Take jQuery, for example. jQuery is supported in the following browsers:

- Chrome: (Current - 1) and Current

- Edge: (Current - 1) and Current

- Firefox: (Current - 1) and Current, ESR

- Internet Explorer: 9+

- Safari: (Current - 1) and Current

- Opera: Current

- Stock browser on Android 4.0+

- Safari on iOS 7+

If your job is to create a JavaScript framework that others will use, then cross-browser testing is probably a good idea. But if your job is to test a website for a startup that uses jQuery, your time would very likely be better spent making sure that your site works in the browser your customers use most.

Cross-browser issues tend to be really big or really small. They seem to fall into three classes.

There's framework-level stuff (e.g. Ember wasn't designed to run on IE 6, Silverlight probably wasn't a great choice for running on Firefox). The best way to find these issues is by doing your homework when adopting new frameworks: think about who else is using them and backing them, and try them out at design time.

There are issues that only happen in one browser (e.g. document.baseURI isn't supported in IE). These are often web-only changes that can be quickly fixed and deployed. When a feature already works in the other browsers, the fix is unlikely to be something extremely complicated that takes days.

There are cosmetic things (e.g. CSS that renders a bit differently in IE vs. Firefox). These are actually really annoying. But unfortunately they're also not caught by most forms of automated testing.

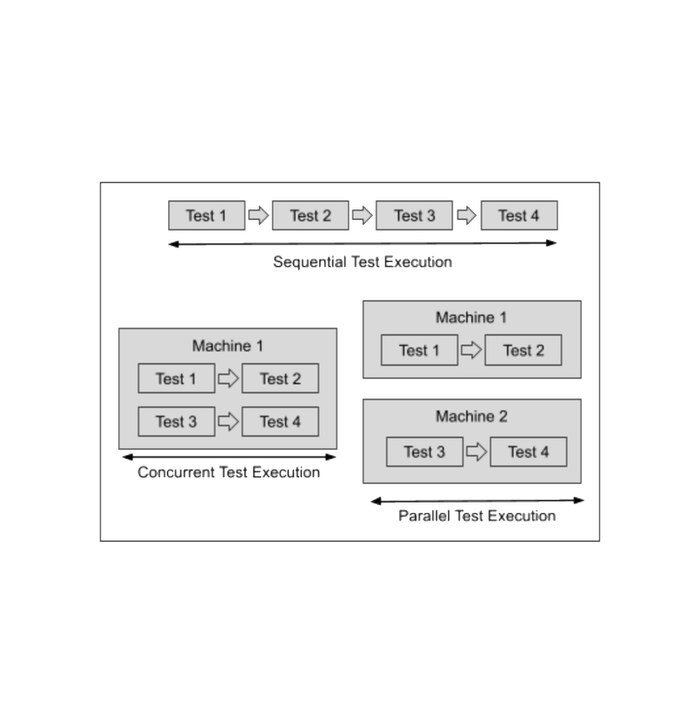

Running your regression suite across multiple browsers can significantly increase the cost to write, maintain, and execute tests. It's not uncommon to encounter different behaviors when Selenium interacts with different browsers. How are popups handled? What about tabs? File downloads? These browser-specific behaviors mean that writing tests that will run across multiple environments is more expensive.

Additionally, the cost to run the tests goes up. Five times the browsers can mean five times the cost to run tests. Even though modern platforms like Testery can help you leverage containers to keep costs down, the tests have to run somewhere. Even if you have a bare metal server sitting around unused, you're still paying for the ping, power, and pipe.
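To see how the multiplier plays out, here is a back-of-the-envelope sketch. It assumes execution cost scales linearly with the number of browsers, and every input (suite size, minutes per test, price per minute) is a hypothetical placeholder rather than a measured figure.

```python
def execution_cost(num_tests, minutes_per_test, cost_per_minute, num_browsers):
    """Estimate the cost of one full regression run.

    Assumption: every test runs once per browser and each run costs the same.
    """
    return num_tests * minutes_per_test * cost_per_minute * num_browsers


# Hypothetical suite: 500 tests, 30 seconds each, $0.02 per compute minute.
one_browser = execution_cost(500, 0.5, 0.02, num_browsers=1)
five_browsers = execution_cost(500, 0.5, 0.02, num_browsers=5)

print(f"1 browser:  ${one_browser:.2f} per run")
print(f"5 browsers: ${five_browsers:.2f} per run")
```

Multiply the per-run figure by how often the suite runs (per commit, per day) to see what the extra browsers cost over a month.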

But perhaps the biggest cost is time spent reviewing test failures. Running tests across browsers can produce a significant number of failures that someone has to investigate but that never turn out to be real defects.