Testery Orchestration - What is it?

In AI and machine learning, classification algorithms assign data to predefined categories or classes. Whether it's spam email detection, medical diagnosis, sentiment analysis, or any other task where you want to categorize data, classification algorithms are at the heart of it. Two key metrics used to evaluate the performance of these algorithms are accuracy and precision. In this article, we will show how to write automated tests that evaluate the accuracy and precision of these models.

What is a Classification Algorithm?

A classification algorithm is a supervised machine learning technique used to assign predefined labels or categories to input data. The primary goal is to create a model that can accurately predict the class to which a given data point belongs. The algorithm "learns" from a labeled dataset during the training phase and then makes predictions on new, unlabeled data. There are various classification algorithms available, including logistic regression, decision trees, random forests, support vector machines, and neural networks.
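To make the train-then-predict cycle concrete, here is a minimal sketch in plain Python. It uses a toy nearest-centroid rule, which is not one of the algorithms listed above; the class name `NearestCentroidClassifier`, its `fit` and `predict` methods, and the data values are all illustrative assumptions.

```python
from collections import defaultdict


class NearestCentroidClassifier:
    """Toy classifier: predicts the label whose training mean is closest."""

    def fit(self, values, labels):
        # "Learning" here is just averaging the feature value per label.
        grouped = defaultdict(list)
        for value, label in zip(values, labels):
            grouped[label].append(value)
        self.centroids = {
            label: sum(group) / len(group) for label, group in grouped.items()
        }
        return self

    def predict(self, values):
        # Assign each new value to the label with the nearest centroid.
        return [
            min(self.centroids, key=lambda label: abs(value - self.centroids[label]))
            for value in values
        ]


# Labeled training data: one numeric feature, labels 0 or 1 (made-up values).
train_values = [1.0, 2.0, 3.0, 8.0, 9.0, 10.0]
train_labels = [0, 0, 0, 1, 1, 1]

model = NearestCentroidClassifier().fit(train_values, train_labels)

# Predict labels for new, unlabeled values.
new_predictions = model.predict([2.5, 7.0])
```

Real classifiers learn far richer decision rules, but the shape is the same: fit on labeled data, then predict on unlabeled data.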

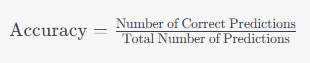

Calculating Accuracy

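Accuracy is a fundamental metric for evaluating classification algorithms. It measures the percentage of correctly classified instances out of the total instances. The formula for accuracy is:

$$\text{Accuracy} = \frac{\text{Number of correct predictions}}{\text{Total number of predictions}}$$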

Here's a breakdown of how to calculate accuracy:

Obtain Predictions: Run your classification algorithm on a test dataset, and it will make predictions for each data point.

Compare Predictions with Actual Labels: For each prediction, compare it with the true label from the test dataset.

Count Correct Predictions: Count the number of predictions that match the actual labels.

Calculate Accuracy: Divide the count of correct predictions by the total number of predictions to get the accuracy value.
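The steps above can be sketched in plain Python. The helper name `compute_accuracy` and the sample labels are assumptions made for illustration only.

```python
def compute_accuracy(predictions, actual_labels):
    """Return the fraction of predictions that match the actual labels."""
    if len(predictions) != len(actual_labels):
        raise ValueError("predictions and actual_labels must have the same length")
    if not predictions:
        raise ValueError("cannot compute accuracy on an empty dataset")
    # Compare each prediction with its true label and count the matches.
    correct = sum(1 for p, a in zip(predictions, actual_labels) if p == a)
    # Divide the count of correct predictions by the total.
    return correct / len(predictions)


# Made-up predictions and true labels for eight data points.
predictions = [1, 0, 1, 1, 0, 0, 1, 0]
actual_labels = [1, 0, 0, 1, 0, 1, 1, 0]

accuracy = compute_accuracy(predictions, actual_labels)  # 6 of 8 match: 0.75
```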

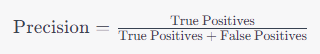

Calculating Precision

Precision is another crucial metric, particularly when dealing with imbalanced datasets. It quantifies the ratio of true positive predictions to the total number of positive predictions (both true positives and false positives). The formula for precision is:

$$\text{Precision} = \frac{\text{True Positives}}{\text{True Positives} + \text{False Positives}}$$

Here's how to calculate precision:

Obtain Predictions: As in the accuracy calculation, run your classification algorithm on a test dataset to get predictions.

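Calculate True Positives and False Positives: Compare the predictions with the actual labels. A true positive is a data point predicted as positive that really is positive; a false positive is a data point predicted as positive that is actually negative.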

Compute Precision: Use the formula to determine the precision value.
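The steps above can be sketched in plain Python. The helper name `compute_precision` and the imbalanced sample data are illustrative assumptions; the data is chosen to show how accuracy can look healthy while precision is poor.

```python
def compute_precision(predictions, actual_labels, positive=1):
    """Return true positives / (true positives + false positives)."""
    pairs = list(zip(predictions, actual_labels))
    true_positives = sum(1 for p, a in pairs if p == positive and a == positive)
    false_positives = sum(1 for p, a in pairs if p == positive and a != positive)
    if true_positives + false_positives == 0:
        raise ValueError("precision is undefined when nothing is predicted positive")
    return true_positives / (true_positives + false_positives)


# Imbalanced, made-up dataset: 5 positives and 95 negatives.
actual_labels = [1] * 5 + [0] * 95
# The classifier finds 4 of the 5 positives but also flags 6 negatives.
predictions = [1, 1, 1, 1, 0] + [1] * 6 + [0] * 89

precision = compute_precision(predictions, actual_labels)  # 4 / (4 + 6) = 0.4
accuracy = sum(p == a for p, a in zip(predictions, actual_labels)) / len(actual_labels)  # 0.93
```

Here 93% of the predictions are correct, yet only 40% of the positive predictions are right, which is why precision is worth asserting on separately.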

Practical Example: Calculating Accuracy and Precision with Python

Now, let's put theory into practice with a real-world example using Python, pytest, and pandas. We will calculate accuracy and precision for a simple binary classification task.

Step 1: Install Required Packages

You need to have pytest and pandas installed. You can install them using pip:

```bash
pip install pytest pandas
```

Step 2: Write the Test

Let's create a Python script to calculate accuracy and precision for our classification algorithm. Save this as test_classification_metrics.py:

```python
import pandas as pd

from your_classification_algorithm import classify_data  # Replace with your actual classifier


def test_classification_metrics():
    # Load your test dataset
    test_data = pd.read_csv('test_data.csv')

    # Make predictions using your classification algorithm
    predictions = classify_data(test_data)

    # Calculate accuracy
    correct_predictions = (predictions == test_data['actual_labels']).sum()
    total_predictions = len(test_data)
    accuracy = correct_predictions / total_predictions

    # Calculate precision
    true_positives = ((predictions == 1) & (test_data['actual_labels'] == 1)).sum()
    false_positives = ((predictions == 1) & (test_data['actual_labels'] == 0)).sum()
    precision = true_positives / (true_positives + false_positives)

    # Define accuracy and precision thresholds based on your problem requirements
    accuracy_threshold = 0.85
    precision_threshold = 0.90

    # Assert that accuracy and precision meet your criteria
    assert accuracy >= accuracy_threshold
    assert precision >= precision_threshold
```

Step 3: Run the Test

Execute the test using pytest:

```bash
pytest test_classification_metrics.py
```

This test calculates and evaluates the accuracy and precision of your classification algorithm, helping ensure your model meets the desired performance criteria.

Why This Works

The method described here is essentially what most data scientists do when they train and evaluate models. All else being equal, a model with higher precision and accuracy is better than one with lower values, and the thresholds you assert on should reflect what your problem requires.

One reason to automate these tests is that, in many systems, the research model and the production model are not exactly the same (e.g. when the production model needs to run at scale, or when one is written in R and the other in Java). Automated tests can catch the discrepancies this introduces.
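One way to check for this kind of mismatch is to run both implementations on the same inputs and measure how often they agree. The sketch below is only an illustration: `research_model` and `production_model` are hypothetical stand-ins (the production one deliberately uses a slightly different threshold comparison), and the 0.99 agreement threshold is an assumed value.

```python
def agreement_rate(predictions_a, predictions_b):
    """Return the fraction of inputs on which two models predict the same label."""
    if len(predictions_a) != len(predictions_b):
        raise ValueError("both models must be run on the same inputs")
    if not predictions_a:
        raise ValueError("cannot compare models on an empty dataset")
    matches = sum(1 for a, b in zip(predictions_a, predictions_b) if a == b)
    return matches / len(predictions_a)


def research_model(inputs):
    # Hypothetical research implementation: positive when score >= 0.5.
    return [1 if score >= 0.5 else 0 for score in inputs]


def production_model(inputs):
    # Hypothetical production port with a subtle bug: uses > instead of >=.
    return [1 if score > 0.5 else 0 for score in inputs]


inputs = [0.1, 0.4, 0.5, 0.6, 0.9]
rate = agreement_rate(research_model(inputs), production_model(inputs))

min_agreement = 0.99  # assumed threshold; pick one that suits your system
models_agree = rate >= min_agreement  # False here: the models differ at 0.5
```

In a pytest suite, the last line would become `assert rate >= min_agreement`, which fails here and points directly at the boundary-case difference between the two implementations.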

Another reason is that production data can change over time, resulting in models that drift and need to be retrained. Having automated tests like this can really help!
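A simple way to watch for drift is to compute accuracy separately for each batch of newly labeled production data and flag the batches that fall below the threshold. This is a minimal sketch under assumptions: the function name `find_drifted_windows`, the weekly batching, and the sample data are all hypothetical, and the 0.85 threshold mirrors the one used in the test earlier.

```python
def window_accuracy(predictions, actual_labels):
    """Return the fraction of correct predictions in one batch."""
    correct = sum(1 for p, a in zip(predictions, actual_labels) if p == a)
    return correct / len(predictions)


def find_drifted_windows(windows, threshold):
    """Return the names of batches whose accuracy is below the threshold."""
    return [
        name
        for name, (predictions, actual_labels) in windows.items()
        if window_accuracy(predictions, actual_labels) < threshold
    ]


# Made-up weekly batches: (model predictions, labels collected afterwards).
model_output = [1, 0, 1, 0, 1, 0, 1, 0, 1, 0]
weekly_batches = {
    "week_1": (model_output, [1, 0, 1, 0, 1, 0, 1, 0, 1, 0]),  # 10 of 10 correct
    "week_2": (model_output, [1, 0, 1, 0, 1, 0, 1, 0, 1, 1]),  # 9 of 10 correct
    "week_3": (model_output, [0, 0, 1, 1, 1, 0, 0, 0, 1, 1]),  # 6 of 10 correct
}

drifted = find_drifted_windows(weekly_batches, threshold=0.85)
```

With this data only `week_3` is flagged, which would be the signal to investigate and possibly retrain.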