Measuring Behavioral Test Coverage with Google Analytics

Measuring behavioral test coverage (which paths through your site are exercised by end-to-end tests) is essential to knowing which paths have tests and which don't.

Because the system-under-test and the tests often run on different machines, traditional approaches to measuring code coverage (e.g., instrumenting the system-under-test on localhost and running unit or integration tests against it) are harder to set up and may not be an option at all.

Fortunately, analytics tools like Pendo (https://www.pendo.io/) or Google Analytics (https://analytics.google.com/) can show you what is and isn't being exercised by your tests. Here's how.

1. Add tracking tags to both your test site and your prod site. Chances are you already have prod tracking tags for Google Analytics, Pendo, etc. Get another tag added for your QA site as well. Make sure you can distinguish test traffic from prod traffic (for example, by using a different tag, a separate customer profile, etc.).

2. Run your end-to-end regression tests as you normally would. Because your tests are now hitting the QA site, you should see live data arriving in your analytics tool.

3. Extract the data. For the most basic analysis, all you need is the list of pages that were visited, for both prod and test. In Google Analytics, go to Behavior -> Site Content -> All Pages and click Export.

4. Analyze the data. There are several useful things you can do with this data.
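Once you have an export for each environment, a few lines of Python are enough to load it. This is a minimal sketch that assumes the CSV has a header row containing `Page` and `Pageviews` columns, possibly preceded by `#` comment lines and followed by a blank line before any other section. The exact layout of your tool's export may differ, so check it against a real file first. `load_page_counts` is a hypothetical helper, not part of any analytics API.

```python
import csv
import io


def load_page_counts(csv_text: str) -> dict[str, int]:
    """Parse an "All Pages"-style CSV export into {page path: pageviews}.

    Assumption: a header row with "Page" and "Pageviews" columns, optionally
    preceded by "#" comment lines, with the table ending at the first blank line.
    """
    lines = csv_text.splitlines()
    # Skip leading comment and blank lines until we reach the header row.
    start = 0
    while start < len(lines) and (not lines[start].strip() or lines[start].startswith("#")):
        start += 1
    # Keep the header and data rows, stopping at the blank line that ends the table.
    table = []
    for line in lines[start:]:
        if not line.strip():
            break
        table.append(line)
    counts: dict[str, int] = {}
    for row in csv.DictReader(io.StringIO("\n".join(table))):
        page = (row.get("Page") or "").strip()
        if not page:
            continue  # e.g. a totals row with an empty "Page" cell
        # Counts may use thousands separators, e.g. "1,234".
        raw = (row.get("Pageviews") or "0").replace(",", "")
        counts[page] = counts.get(page, 0) + int(raw)
    return counts
```

For example, `prod = load_page_counts(open("prod-pages.csv", encoding="utf-8").read())`, where the file name is a placeholder for wherever you saved the prod export; do the same for the test export.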

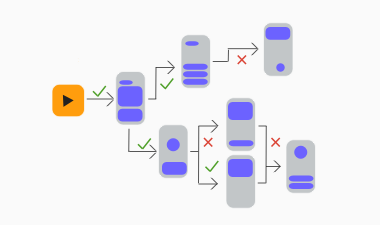

If you've extracted the list of pages, the first thing to do is diff the page lists between prod and test. If your regression tests don't hit 100% of the pages that show up in prod, you have gaps in your testing, and you can prioritize them by the business importance of each page and/or how many of your visitors hit it. A word of caution, though: 100% doesn't mean you have tested everything that should be tested. In general, each page should participate in more than one scenario. The purpose of this type of analysis isn't to guarantee completeness of testing so much as to give you a very easy way to identify major gaps.
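The prod/test diff described above can be sketched in Python. This is an illustration, not a feature of Google Analytics or Pendo: it assumes each environment's data has already been loaded into a `{page path: pageviews}` dictionary (for example, parsed from the CSV export). `coverage_report` and its `min_test_views` threshold are hypothetical, and test pageviews are only a rough stand-in for the number of scenarios that touch a page.

```python
def coverage_report(
    prod: dict[str, int], test: dict[str, int], min_test_views: int = 2
) -> tuple[float, list[str], list[str]]:
    """Compare prod and test page lists (hypothetical helper).

    Returns (fraction of prod pages seen in test,
             untested prod pages sorted by prod traffic, busiest first,
             tested pages with fewer than min_test_views test pageviews).
    """
    prod_pages = set(prod)
    tested = prod_pages & set(test)
    coverage = len(tested) / len(prod_pages) if prod_pages else 1.0
    # Busiest untested pages first; ties broken alphabetically for stable output.
    untested = sorted(prod_pages - tested, key=lambda p: (-prod[p], p))
    # Rough proxy for "only one scenario touches this page" (an assumption).
    thin = sorted(p for p in tested if test[p] < min_test_views)
    return coverage, untested, thin
```

Sorting by prod pageviews covers the "how many of your visitors" half of the prioritization; business importance still needs human judgment. Pages seen only in test are ignored here, and paths that embed IDs or query strings (such as `/orders/123`) may need normalizing before the two sets line up.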

If your analytics tool supports more advanced user analytics, such as tracking goals/conversions or user paths through the site, this information is incredibly useful for checking coverage of whole flows rather than individual pages. If your marketing team thinks a path in the application is worth measuring, that's a really good sign it's worth having an automated test for.
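For user paths, the same diff idea can be applied to page-to-page transitions instead of single pages. This sketch assumes you can already get, for each session, the ordered list of page paths it visited; how you obtain that depends on your analytics tool and plan, and the list-of-lists input format is an assumption. `transitions` and `untested_transitions` are hypothetical helpers.

```python
from collections import Counter


def transitions(sessions: list[list[str]]) -> Counter:
    """Count (from_page, to_page) steps across sessions.

    Assumption: each session is an ordered list of page paths.
    """
    steps: Counter = Counter()
    for pages in sessions:
        # Pair each page with the one visited right after it.
        steps.update(zip(pages, pages[1:]))
    return steps


def untested_transitions(
    prod_sessions: list[list[str]], test_sessions: list[list[str]]
) -> list[tuple[tuple[str, str], int]]:
    """Prod transitions never exercised by the tests, most frequent first."""
    prod = transitions(prod_sessions)
    test = transitions(test_sessions)
    missing = [(step, n) for step, n in prod.items() if step not in test]
    return sorted(missing, key=lambda item: (-item[1], item[0]))
```

A transition that real users take often but no test ever takes points to a flow worth automating, even when every page along it already shows up in the page-level diff.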